How to Win With AI Video Ads

Advice from one of today’s greatest paid advertising experts who has worked with Shopify, Coinbase, Character.ai, Pinterest, Lyft and more.

Hi there, it’s Adam. I started this newsletter to provide a no-bullshit, guided approach to solving some of the hardest problems for people and companies. That includes Growth, Product, and company building. Subscribe and never miss an issue. If you’re a parent or parenting-curious, I’ve got a Webby Award Honoree podcast on fatherhood and startups: check out Startup Dad.

Questions you’d like to see me answer? Ask them here.

Dan Birdwhistell (🐦whistle) is a highly respected social media paid advertising consultant known for his expertise in building, optimizing, and scaling profitable paid user acquisition programs on Meta and Reddit Ads. He has managed over $800 million in ad spend over his career, working with the world’s best-known tech companies and high-growth startups including Shopify, Asana, Pinterest, Squarespace, Whatnot, Kalshi, Character.ai, Lyft, Ipsy, Everlywell, Patreon, LinkedIn, Calm, Redfin, Superhuman, IAC, Coinbase, Bumble, and many more.

Check out his popular course on Reforge: Meta Ads for Advanced Practitioners.

In today’s article, Dan shares something he’s never shared before: the playbook he’s developed across hundreds of hours of experimentation for mastering AI-generated video ads. I have never seen anything like it, and when Dan and I talked about it, I knew I needed to share it with all of you. It includes lessons learned, prompts, scripts, and video output from top-performing ads. Dan readily admits he knows nothing about modern video editing tools, so these ads are 100% generated via natural-language prompting. It’s rare to get such unique insights out of the head of a practitioner who is doing the work right now… but that’s exactly what we did here!

Read today’s newsletter to learn:

Why more and more companies are adding AI-generated video to their OKRs

Specific guidance, tips and frameworks Dan has learned through many extended sessions of generating video ads

Where Dan thinks AI video ads are headed, what’s next and where he’s spending his time to maintain his edge

Get access to the exact prompts and scripts he’s developed to create top-performing ads for some of today’s biggest companies!

I’ve always worked at the bleeding edge of paid advertising, helping clients try new approaches and set new standards for what’s possible to drive incremental, efficient growth. I’ve worked in every social channel you can imagine (Facebook, Instagram, TikTok, Reddit, YouTube and LinkedIn) and helped clients spend close to $1 billion on advertising.

A few months ago I noticed a new trend emerging with many of my clients: AI-driven advertising. First it was goals to use AI to create ads and shorten the “ideation - creation - testing - iteration” cycle; then it morphed into OKRs mandating that 50% of ads be generated by AI. I saw this trend coming, and Google’s launch of Veo3 only accelerated it.

If you haven’t heard, Veo3 is the latest state-of-the-art AI video generation model, which Google introduced in May of this year. Its key improvement over Veo2 is that it creates high-definition video with synchronized, native audio (dialogue, sound effects, ambient noise and background music), all from a text or image prompt.

So why are companies setting these new, ambitious goals?

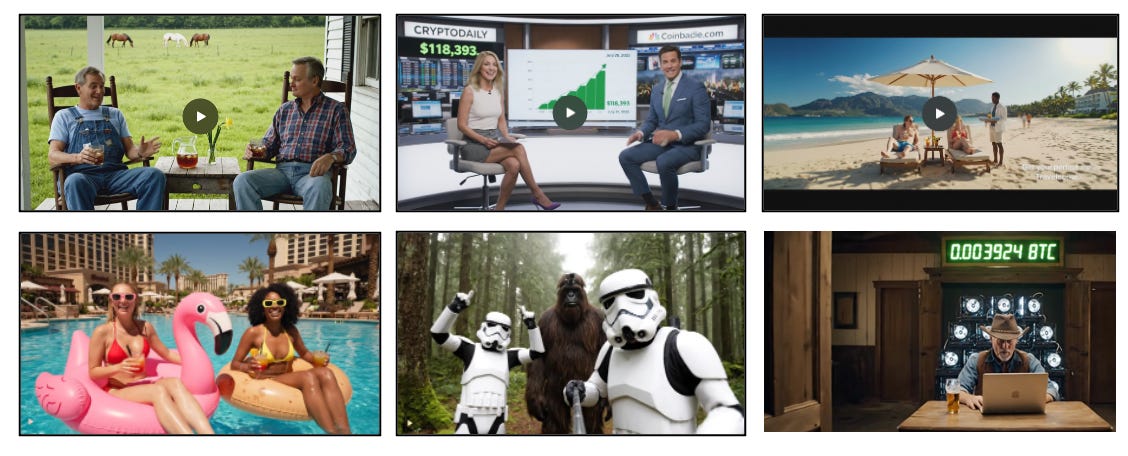

AI ads are winning. By big margins. Ads like these 👇👇👇

In my experiments, UGC-style Veo3 ads are outperforming human-produced ads by 2x. This holds across Meta and Reddit, and I’m seeing it in conversion, not just clickthrough.

Today I’m sharing an early view of the playbook I’ve developed across hundreds of hours of experimentation with AI video ad creation. It’s taken me 50+ hours just to get Veo3 close to dialed-in. It generates amazing settings, actors, voices, body language, etc., but it is very difficult to keep characters and voices consistent across 2+ scripts, and to keep physical settings plain without the AI trying to get fancy (e.g., adding wall art with misspelled text). Rather than share a single prompt format, I’m including multiple formats so you can mix and match to create your own videos.

Read my approach and then take all the prompts for your own use. I find it’s fun to take the longer ones and change a few variables to see what you get. If you do something really interesting, share it in the comments!

Actionable Approaches to AI Video

#1: Lean into Specificity — Especially in Setting and Character

When you outsource ad production to a creative agency or a set of influencers, they draft scripts with the level of specificity needed for the environment, types of actors, etc. That detail costs tens of thousands of dollars. With AI you can recreate it yourself, but you have to be extremely specific about what you want.

Why it works

Specific details make the video more photorealistic and emotionally resonant. Veo3 appears to draw on Google Street View imagery when you reference real locations, which is incredibly powerful. I had completely forgotten about Street View until I asked Veo3 to make a video in the Palermo district of Buenos Aires, Argentina. It dropped the character right there, and the scene was shockingly accurate. You get realistic textures and backgrounds, and when they’re paired with lifelike characters, they create an immediate sense of authenticity.

There is one major caveat here:

List as many details as matter to you, BUT use as few words as possible. In Veo3 today, the more words you use, the more likely it is to either leave key items out or (worse yet!) combine them into something entirely outside your initial script.

How to Achieve This: SCOA Framework

Setting: Use real-world locations (e.g. “Palermo neighborhood in Buenos Aires” or “Times Square in December”) to boost believability.

Characters: Describe age, clothing, demeanor, voice tone, and even eye color.

Objects: Add grounding props like iced tea pitchers, suitcases, or travel posters.

Voice/Action cues: Include stage directions and vocal inflections (e.g. “elongation of ‘ee’”). Suggest subtle body language movements as a final touch.

Don’t Forget

If the vibe is crucial (e.g. “super chill” or “whimsical”), state it explicitly.
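To see how the four SCOA parts fit together, here is a minimal Python sketch that assembles a prompt from them and reports its word count, since shorter prompts are less likely to drop or merge details. The `ScoaPrompt` class, its field names, the clause labels, and the 120-word threshold are hypothetical illustrations, not part of Veo3 or Dan’s actual prompts; the character details are invented for the example.

```python
from dataclasses import dataclass, field


@dataclass
class ScoaPrompt:
    """Hypothetical helper that assembles a terse prompt from the SCOA parts."""

    setting: str                                      # real-world location
    characters: list[str]                             # age, clothing, demeanor, voice, eye color
    objects: list[str] = field(default_factory=list)  # grounding props
    cues: list[str] = field(default_factory=list)     # stage directions, vocal inflections
    vibe: str = ""                                    # state the vibe explicitly when it matters

    def render(self) -> str:
        """Join the parts into one prompt, one short clause per detail."""
        parts = [f"Setting: {self.setting}."]
        parts += [f"Character: {c}." for c in self.characters]
        if self.objects:
            parts.append("Props: " + ", ".join(self.objects) + ".")
        parts += [f"Direction: {c}." for c in self.cues]
        if self.vibe:
            parts.append(f"Vibe: {self.vibe}.")
        return " ".join(parts)

    def word_count(self) -> int:
        """Rough prompt length in whitespace-separated words."""
        return len(self.render().split())


if __name__ == "__main__":
    porch = ScoaPrompt(
        setting="front porch of a 1920s farmhouse, pasture with horses behind",
        characters=[
            "Jack, early 60s, flannel shirt, smirking, slow drawl",
            "Phil, late 50s, straw hat, puzzled, gravelly voice",
        ],
        objects=["iced tea pitcher", "daffodil in a vase on a small table"],
        cues=["Jack leans in before his line", "Phil pauses before answering"],
        vibe="super chill",
    )
    print(porch.render())
    # Hypothetical budget: the article gives no number, so tune this yourself.
    if porch.word_count() > 120:
        print("Consider trimming: long prompts risk dropped or merged details.")
```

Keeping each part in its own field makes it easy to change one variable at a time (a new setting, a different vibe) while holding the rest of the prompt fixed.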

Example prompt leveraging SCOA

Make a short video that features two older gentlemen in their late 50s or early 60s and they are sitting on the front porch of a 1920s farm house. You can see a big pasture in the background and a few horses in the distance. There’s a little table between the two of them with an iced tea pitcher on it and a daffodil in a vase…

Jack: “Now Phil…have you ever actually seen a Bitcoin out in the wild?!”

Phil: “Well…Jack…I don’t reckon that I have…”

Output

#2: Start with UGC Vibes, Not Studio Polish

When people are swiping through feeds on social platforms, they’re drawn to user-generated content (UGC), not necessarily high production value. Think selfies, bouncing cameras, talking directly to the audience, etc.

Why it works

UGC-style content consistently outperforms polished human-actor ads across Meta and Reddit, especially in conversion rates. It feels more authentic, costs significantly less, and is increasingly indistinguishable from the real thing. Veo3 makes it easy to simulate UGC with high fidelity.

Follow This Approach

Use a first-person perspective, like a vlogger holding a selfie stick.

Add light camera shake or describe handheld movement (e.g., “camera walking backward” or “slight handheld camera motion”).

Skip added audio (like music) and captions to mimic off-the-cuff TikToks. Most platforms will add captions anyway.

Make the subject speak directly to the camera (and user).

Pro tip:

For now, landscape and square formats (16:9 and 1:1) perform better than vertical (9:16) in my tests. This runs somewhat counter to traditional influencer UGC, so pay attention!

Example prompt leveraging UGC Vibes

Make a first-person video in vlogger style with the subject’s left arm extended like they are holding a camera. She has a selfie stick showing in her hand… She’s walking down a busy street in the Palermo neighborhood in Buenos Aires, Argentina… She’s beaming with happiness and talks rapidly and says: “I had TravelScout.ai plan the perfect itinerary, and it’s been amazing! I’m in Buenos Aires now en route to Patagonia!!! Chao!”

Output

#3: Treat Dialogue Like Sketch Comedy

Writing ad dialogue like sketch comedy is about tight structure, fast pacing and an emotional twist. The goal is to entertain first, then sell. When you prompt Veo3, write and guide the AI with the same structure and pacing as a short comedic sketch.

Why it works

Snappy, punchy dialogue with a twist or comedic beat engages viewers and creates a mini-narrative arc in under 10 seconds. These moments get shared and replayed, which stretches your ad spend further. They’re memorable (humor sticks), efficient, and shareable because they feel like content rather than a sales pitch.

How to Achieve

Set the scene clearly and quickly. Introduce the characters and location with just enough detail to ground the viewer. Think of it like a cold open on SNL or a TikTok skit — no exposition needed. E.g.: “Two farmers rocking on a porch. One’s smirking.”

Use a clear setup line. This is your tension, misdirection, or a question that sets up the punchline. E.g.: “Now Phil, have you ever actually seen a Bitcoin out in the wild?”

Deliver a punchline or unexpected twist. Make it surprising, absurd and/or emotionally exaggerated; if you can do all three, even better. But keep it short: you’re trying to capture the entire exchange within Veo3’s ~8-second limit. E.g.: “That’s ‘cause you’re broke!” (followed by laughter).

Use reaction for emphasis. Think about the best comedy you’ve seen. A really great punchline is usually followed by a deadpan stare, some awkward silence or an over-the-top response.
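The four beats and the ~8-second limit lend themselves to a quick sanity check before you spend a generation. The Python sketch below estimates runtime from word count; the `Sketch` class is hypothetical, and the speaking rate of 2.5 words per second (about 150 words per minute) is an assumption you should calibrate against your own Veo3 outputs.

```python
from dataclasses import dataclass

CLIP_SECONDS = 8.0       # Veo3's ~8-second clip limit, per the article
WORDS_PER_SECOND = 2.5   # assumption: roughly 150 spoken words per minute


@dataclass
class Sketch:
    """Hypothetical four-beat sketch: scene, setup, punchline, reaction."""

    scene: str               # visual grounding; not spoken, so not timed
    setup: str               # tension, misdirection, or a question
    punchline: str           # surprising, absurd, or exaggerated twist
    reaction_seconds: float  # deadpan stare, silence, laughter, etc.

    def spoken_seconds(self) -> float:
        """Estimated time to speak the setup and the punchline."""
        words = len(self.setup.split()) + len(self.punchline.split())
        return words / WORDS_PER_SECOND

    def estimated_runtime(self) -> float:
        return self.spoken_seconds() + self.reaction_seconds

    def fits_clip(self) -> bool:
        return self.estimated_runtime() <= CLIP_SECONDS


if __name__ == "__main__":
    porch = Sketch(
        scene="Two farmers rocking on a porch. One's smirking.",
        setup="Now Phil, have you ever actually seen a Bitcoin out in the wild?",
        punchline="That's 'cause you're broke!",
        reaction_seconds=1.0,  # laughter
    )
    print(f"{porch.estimated_runtime():.1f} s, fits: {porch.fits_clip()}")  # 7.8 s, fits: True
```

Under these assumptions the porch exchange leaves only about 0.2 seconds of slack, which is one more reason to keep the punchline short.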

Pro tip

Even single-line twists (e.g. “NO! I bought you more Bitcoin!”) can carry an entire ad if delivered well.

Example Prompt

The woman has her right hand behind her back, seemingly concealing flowers… He says, “Did you bring me FLOWERS?!” and she waits for a second and pulls her phone out from her back showing his Crypto portfolio and she yells, “NO! I bought you more Bitcoin!”. The husband runs across the room and jumps on her saying, “Yes! I love you! MORE Bitcoin!”

Output

#4: Use the “Trip in a Box” Visual Metamorphosis

Visual Metamorphosis refers to a storytelling technique where a mundane, everyday setting is transformed (visually and suddenly) into an aspirational fantasy or ideal experience. It’s essentially using AI to simulate magic realism in video form.

Why it works

AI video generation excels at magical realism without the cost of fancy CGI. Transformation scenes break expectations and reinforce product value in visual form. They draw people in, viewers watch the transformation happen in real time, and they’re nearly impossible to pull off with traditional video production.

How to Achieve in Two “Acts”

The Setup - start with an ordinary setting (e.g. office or home). Make it realistic, ordinary, boring and relatable.

Introduce a magical object or trigger (e.g. glowing globe, app command).

Add stage directions or cues like the “glowing globe” or the “melting floor.”

Describe a rapid visual transformation / metamorphosis into an aspirational scene. This should be triggered by the object.

End with dialogue or CTA and a strong visual payoff.

Pro tip

ASMR-style audio (e.g., whooshes, waves) pairs well with transformation effects and reinforces immersion.

Example Prompt

The travel agent leans over towards the couple and the world globe begins to light up. She says, “How about somewhere tropical?!” The globe emits a burst of light and the room transforms into a tropical beach, with the couple now in lounge chairs with drinks. The man says, “Perfect!” and they toast their drinks with a ‘clink’ sound.

Text overlay: “Get your perfect trip on Travelscout.com”

Output

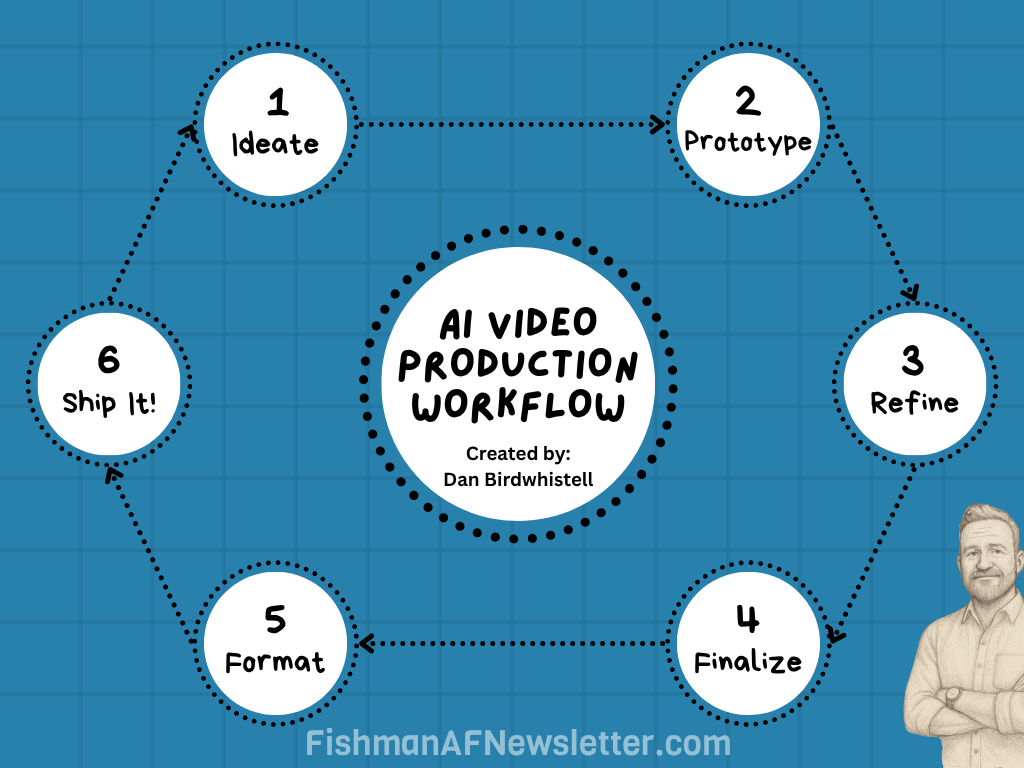

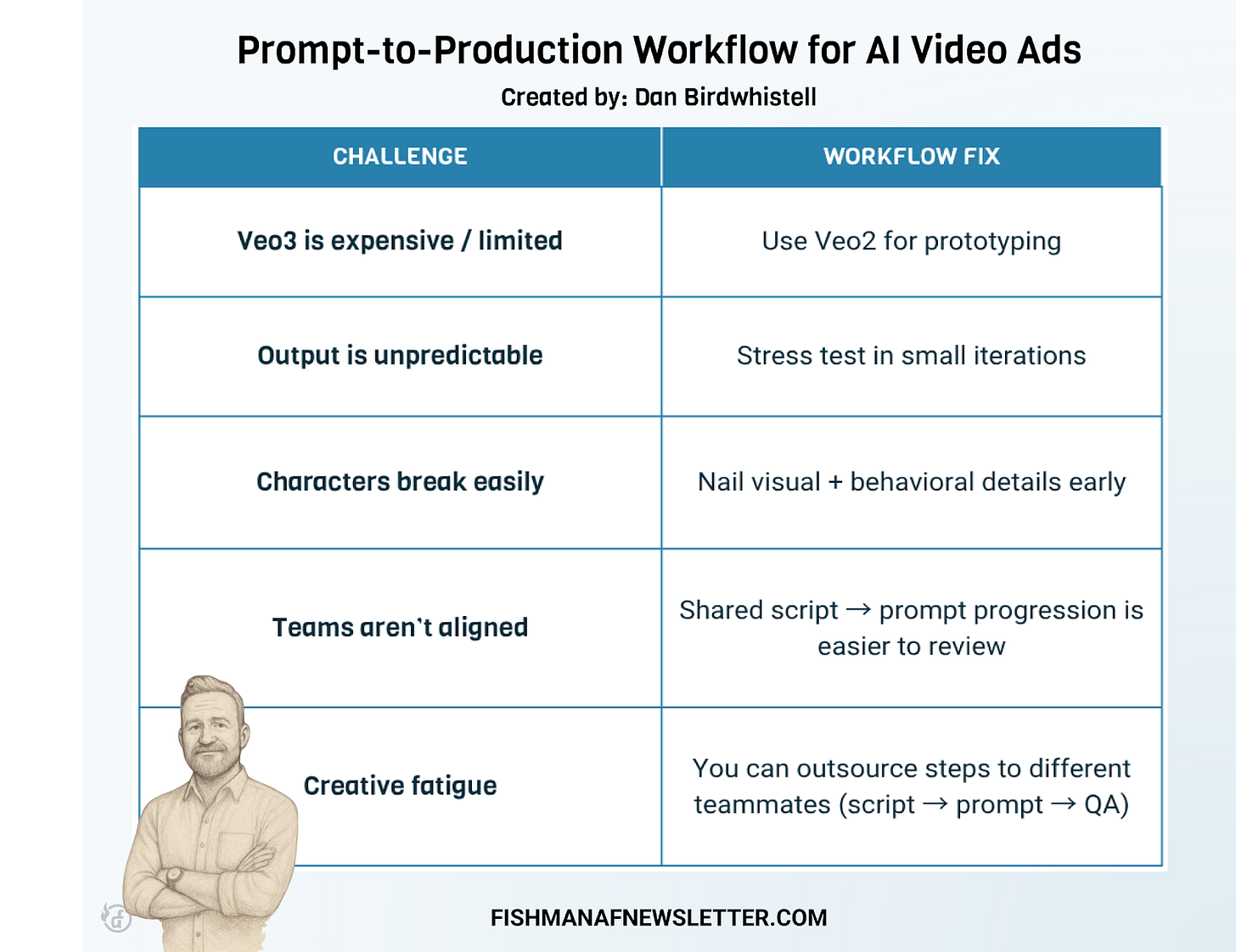

#5: Build a Prompt-to-Production Workflow

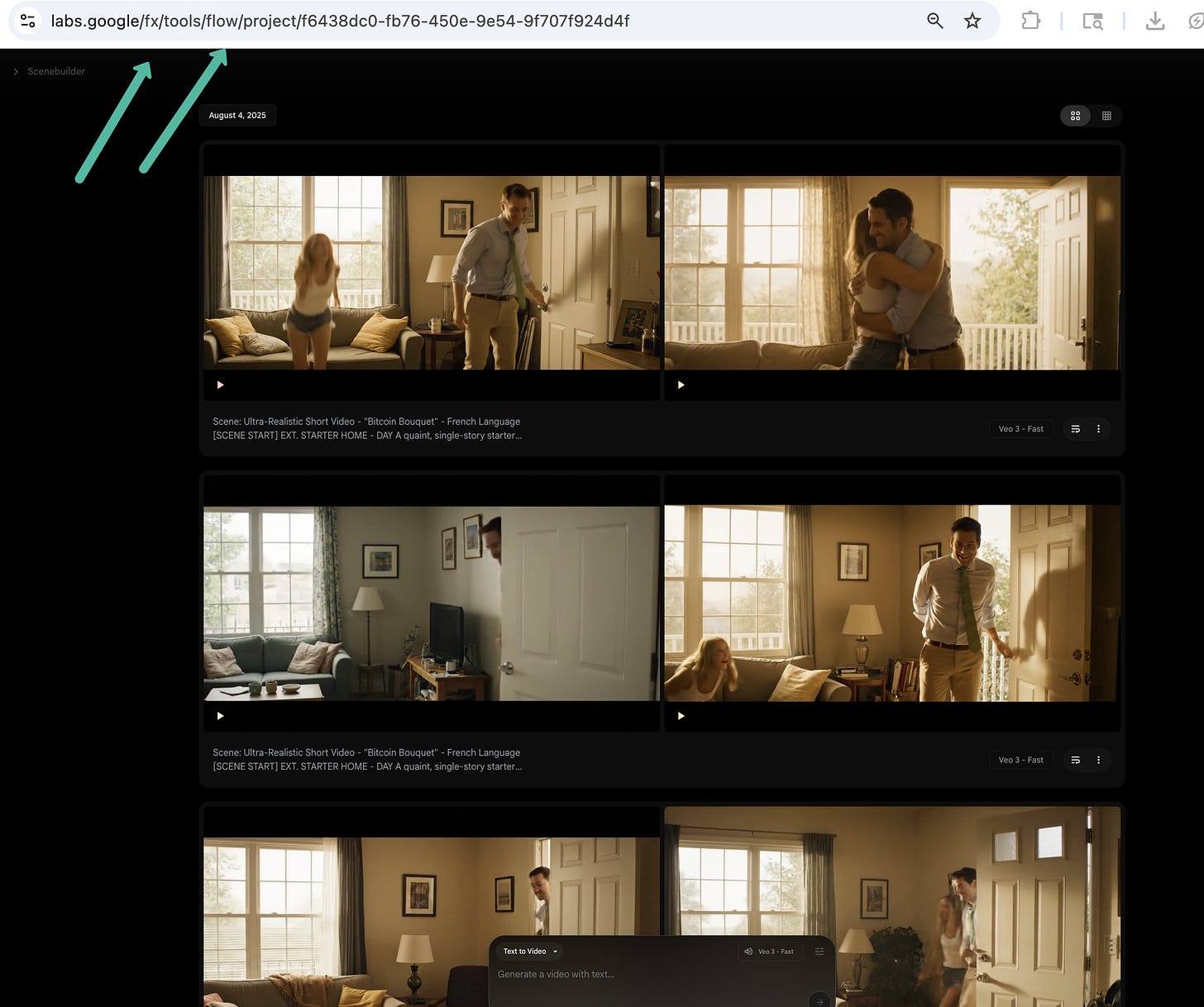

One of the fundamental limits of Veo3 is that you can only create a few videos per day. Google limits Ultra users ($250/mo) to 5 videos per day, so you have to get creative to generate more. You’ll need the extra volume, because it tends to take 3-6 attempts to get a single good output.

Note: As of this writing, there is a workaround to get more access to Veo3: navigate to the “Flow” workspace and choose Veo3 as the model. This only works if you have an “Ultra” or “Pro” membership. Doing this lets you generate multiple versions for only ~$0.20 per video. That’s a pretty significant change, and I don’t know how long it will last, so the remainder of this section is a workflow that doesn’t require workarounds.
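The numbers above (a 5-video daily cap, 3-6 attempts per good output, and ~$0.20 per video in Flow) are worth running through before you plan a production day. Here is a back-of-the-envelope Python sketch, assuming every good video takes a fixed number of attempts; the function names are illustrative, and the limits and prices are the article’s as-of-writing figures, which may change.

```python
import math

DAILY_CAP = 5               # Ultra daily Veo3 limit cited in the article
FLOW_COST_PER_VIDEO = 0.20  # approximate Flow cost per video cited in the article (USD)


def keepers_per_day(daily_cap: int, attempts_per_keeper: int) -> float:
    """Usable videos per day if each one takes a fixed number of attempts."""
    return daily_cap / attempts_per_keeper


def days_per_keeper(daily_cap: int, attempts_per_keeper: int) -> int:
    """Days of quota needed to finish one concept under the daily cap."""
    return math.ceil(attempts_per_keeper / daily_cap)


def cost_per_keeper(cost_per_video: float, attempts_per_keeper: int) -> float:
    """Generation cost of one usable video at a flat per-video price."""
    return cost_per_video * attempts_per_keeper


if __name__ == "__main__":
    for attempts in (3, 6):  # the article's 3-6 attempt range
        print(
            f"{attempts} attempts: "
            f"{keepers_per_day(DAILY_CAP, attempts):.2f} keepers/day under the cap, "
            f"{days_per_keeper(DAILY_CAP, attempts)} day(s) per concept, "
            f"~${cost_per_keeper(FLOW_COST_PER_VIDEO, attempts):.2f} each via Flow"
        )
```

Under these assumptions the daily cap yields roughly 0.8 to 1.7 usable videos per day, and a 6-attempt concept spills into a second day, while Flow pricing works out to about $0.60 to $1.20 per usable video.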

Check it out:

Why it works

You need a multi-stage creative process to help you get consistently high-quality AI video outputs without wasting generation credits or time. Generating with Veo3 (or any AI video model) isn’t a one-and-done deal. Even the best tools are still quite noisy and require iteration.

How to Achieve: Looped Production Workflow

Ideate creative concepts and hooks first — no need for polish early. Here you’re developing scripts and scenarios like a creative director. You’re not prompting (yet); you’re outlining character, setting, mood and dialogue. Ask yourself:

What emotion are we trying to evoke?

What moment is the “ad climax”?

Are you going for pure UGC, sketch, magic, or something else?

“Prototype” in Veo2 to test visuals and structure. Since Veo2 has looser (or no) limits, you can hang out here all day if you need to. You won’t get audio, unfortunately, but you can answer questions like:

Does the scene render correctly?

Do the characters behave as expected?

Is the tone (funny, serious, magical) coming through?

Note: Here you can also troubleshoot body language and weird background hiccups.

Refine and polish your prompt to prepare for Veo3. After you’ve tested extensively in Veo2, take the best-performing versions and optimize them for Veo3. This means:

Tighten up descriptions (e.g. “woman with freckles” instead of “attractive woman.”)

Get specific on visual anchors (e.g. “left arm is extended holding a selfie stick.”)

Be precise about tone, character reactions, and camera behavior.

Add in dialogue timing with timestamps, voice inflection cues, and specific prohibitions (e.g. “no captions.”)

Finalize in Veo3 with detailed prompt formatting. This is the real run, and you should expect to nail it within 3-6 attempts. Use all the polish from the refine step, and be picky, because this is your actual output.

Format and finalize. Once you’re done, export it in multiple aspect ratios (remember, 16:9 and 1:1 are performing best right now). If you’re really adventurous (I’m not), you can pull the videos into CapCut or After Effects for some post-production, like light music, a brand watermark, or a subtle text overlay/CTA.
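The refine step calls for adding dialogue timing with timestamps. One way to draft those timestamps is to estimate each line’s duration from its word count, as in this Python sketch. The `timestamp_dialogue` helper, the 2.5 words-per-second speaking rate, the half-second pause between lines, and the bracketed timestamp format are assumptions for illustration, not Veo3 syntax; splitting the CNBC line between two anchors is likewise invented.

```python
CLIP_SECONDS = 8.0       # Veo3's ~8-second clip limit, per the article
WORDS_PER_SECOND = 2.5   # assumption: roughly 150 spoken words per minute


def timestamp_dialogue(lines: list[tuple[str, str]], pause: float = 0.5) -> list[str]:
    """Hypothetical helper: prefix each (speaker, line) pair with estimated start/end times.

    Raises ValueError if the spoken dialogue would overrun the clip.
    """
    stamped = []
    t = 0.0
    for speaker, text in lines:
        end = t + len(text.split()) / WORDS_PER_SECOND
        stamped.append(f'[{t:.1f}s-{end:.1f}s] {speaker}: "{text}"')
        t = end + pause  # leave a beat before the next speaker
    spoken_end = t - pause if lines else 0.0
    if spoken_end > CLIP_SECONDS:
        raise ValueError(f"dialogue runs {spoken_end:.1f}s, over the {CLIP_SECONDS:.0f}s clip")
    return stamped


if __name__ == "__main__":
    for line in timestamp_dialogue([
        ("Anchor 1", "Bitcoin is back!"),
        ("Anchor 2", "Ready to get in?!"),
    ]):
        print(line)
```

The estimated times are only a starting point; adjust them once you hear how fast Veo3 actually delivers the lines.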

Pro tip

Empower non-brand folks across your org to experiment — creativity hides in surprising places.

Example Prompt

Draft in Veo2: “Make video of two news anchors on a CNBC finance show talking about the impressive growth in Bitcoin: ‘Bitcoin is back! Ready to get in?!’”

Final in Veo3: Add hair color, outfit, emotional tone, specify no captions, and confirm voice fits all dialogue. Test until you get one clean output with all constraints met.

Output

Why is this workflow so helpful?

Where Does This Go Next?

I’ve followed the evolution of Veo and AI video generation capabilities since Sora launched in December 2024. The pace is accelerating, and here are my predictions for the next year.

Creating Real, Imperfect People

Right now, Veo3 is 85% “there” with respect to generating characters that are indistinguishable from humans. It’s great at nailing the whole person in terms of voice, body, and movements; however, it’s still missing the subtle imperfections (hair out of place, rosy cheeks, slouched shoulders) that signal to us that we’re looking at a real human. I expect this to be solved before the start of Q4.

Pulling in Physical Product Integration

The biggest limitation of these tools at the moment is that it’s almost impossible to get a specific product to render properly in a larger scene, and it’s even harder to get on-screen text spelled correctly. We know Meta and a handful of video companies have tools to animate product photos, but none of them has come close to doing this inside a larger video. We predict that someone cracks this before we get too deep into Q4. If so, this will be the first time in a while that Black Friday / Cyber Monday looks very, very different from previous years.

Success with Short, Highly-Specific Action Requests

Influencers and creative agencies have always over-indexed on creating 30- to 60-second videos packed with information, but (sadly) almost no one watches the full video. I’m having the most success with 3- to 8-second videos that ask the viewer for a quick, low-friction action. For example: “Tap to update your [APP NAME] app” or “Tap below to claim your username.” I expect this strategy to stay effective.

Video Generation Enriched with Real-time Data Feeds

At Coinbase, for example, I’m trying to crack generating videos with near-real-time Bitcoin and Ethereum price quotes. Timely prices create urgency, which is key to increasing conversion. Looking forward, we should expect this to evolve quickly into tools that create custom offers or deals for micro-targeted audiences. You could imagine an MCP (Model Context Protocol) server that pulls in real-time prices when generating ads.
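A minimal Python sketch of the real-time idea: a helper that turns a price quote into a spoken line for a video prompt and refuses quotes too old to count as near-real-time. Fetching the quote is deliberately left out, since it would come from your own data source (an MCP server, as suggested above, or an internal pricing service). The `price_hook` name, the 15-minute freshness window, and the line template are all assumptions.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

MAX_QUOTE_AGE = timedelta(minutes=15)  # assumption: what counts as "near-real-time"


def price_hook(asset: str, price_usd: float, quoted_at: datetime,
               now: Optional[datetime] = None) -> str:
    """Hypothetical helper: build a spoken prompt line from a fresh price quote.

    `quoted_at` must be timezone-aware. Raises ValueError for stale quotes so an
    outdated price never ends up in a generated ad.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    age = now - quoted_at
    if age > MAX_QUOTE_AGE:
        raise ValueError(f"{asset} quote is {age} old; refresh it before generating")
    return f'The anchor says: "{asset} is at ${price_usd:,.0f} right now. Ready to get in?!"'


if __name__ == "__main__":
    quoted = datetime.now(timezone.utc) - timedelta(minutes=2)
    # Placeholder price for illustration only, not real market data.
    print(price_hook("Bitcoin", 12345.0, quoted))
```

Failing loudly on stale data is a deliberate choice here: a wrong price in a live ad is worse than skipping a generation.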

Automated Scene-Stitching and/or 0:30 Options

The existing Flow Veo2 UX makes it easy to create multiple videos at a time under a single project header. We anticipate that in short order, Google will allow for both longer outputs (hopefully up to 0:30) and also add some basic editing and scene-stitching tools to the existing Flow console. I think there are lots of people like me who will get proficient at making videos, but are clueless when it comes to editing or building a longer storyline with multiple scenes.

Copy As Captions, Not Introduction

The text around the videos will increasingly serve as the product or service description, much the way captions have historically been used, rather than as an introduction. I’ve already cut 80% of the copy used with agency-developed video ads, and I expect this trend to continue.

Small Company Breakouts Start to Happen All the Time

Some of the world’s most creative people are small business owners. As they become familiar with these tools, we’ll likely see many small companies become breakout hits with a single creative video. Historically these companies didn’t have the budget for a creative agency or a bunch of influencer contracts. Now they can make something that could take them from $500k/year in revenue to selling out their full warehouse overnight. We’ve also found that the best video ideas often come from the people furthest from existing internal creative services groups.

—

Ready to get started? Good luck making your first videos! Here are a few final tips:

Set aside 1-2 hour blocks to really get in the zone. I typically need 5-6 iterations of a specific concept before I find something workable, and then another 8-10 iterations to perfect the dialogue.

Both Veo2 and Veo3 save your prompt and workflow history, so don’t be afraid to pause and revisit concepts later, when you’re in a different mindset. Producing crisp, vibrant, and emotionally resonant videos often takes as much obsessive attention to detail as it does wild-eyed creative concepting.

Be sure to share your works-in-progress with friends and colleagues as you go. Group input is typically the kryptonite of creativity, but with Veo3 it takes just a moment or two to try out everyone’s ideas!

Have fun, and we’re looking forward to seeing what you create. Share your work in the comments below!

Get ALL My Prompts and Scripts

Want the full document with all of my prompts and scripts? Fill out the brief form at the link below and we’ll send it to you!
